Trying to read an article but been in front of the screen too long already? Your problems may be over thanks to the Skimmer app.

Designed for people with situational impairments, such as those commuting or resting tired eyes, the prototype lets users ‘skim read’ complex documents by listening rather than looking. And it was given the most rigorous of tests: the 99 bus at peak hour.

“I’m not saying it should...but in many ways, in terms of the time people are spending, [audio] is replacing reading.”

Assistant Professor Dongwook Yoon

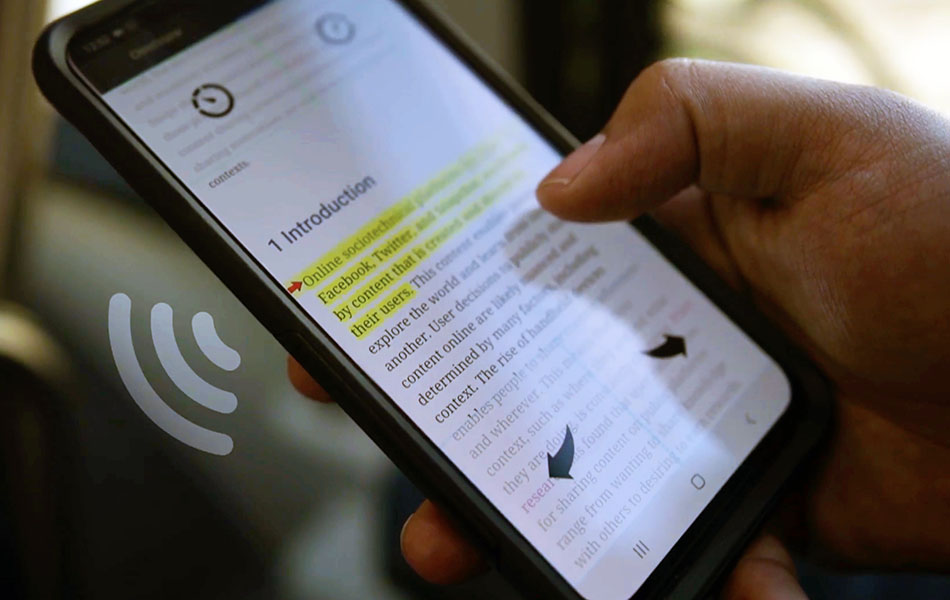

While readers can skim read complex documents, visually scanning a journal article and jumping to relevant sections to get its gist, podcasts and text-to-speech apps read from start to finish. Enter Skimmer. The app reads the text of a document aloud and lets users tap the device’s screen to skip between chapter headings, discourse markers (such as 'to conclude'), and any referenced tables or figures, as well as to adjust the speech rate. Skimmer also provides auditory and haptic feedback as non-visual navigation cues, for instance, using a different voice for chapter headings than for general text.

The app is the work of UBC Computer Science alumnus Taslim Arefin Khan and Language Sciences members Professor Joanna McGrenere and Assistant Professor Dongwook Yoon (Computer Science), and was presented in a paper in April at the Conference on Human Factors in Computing Systems. The rising popularity of audio content, often consumed while performing another activity, such as commuting or exercising, provides an opportunity to leverage these windows of time for more intellectually stimulating activities, such as reading a document, says Yoon.

The prototype is also a step towards designing an app for users with visual impairments, says lead author Khan, who previously created a Braille-reading pen that helped expand the books available to children with visual impairments in Bangladesh. “The good thing about Human Computer Interaction [as a field] is you get to see whatever you implement and you can see the feedback, the smile on the user’s face.”

"Technology shouldn't force a dominant culture, language, etc. Good design should be a force that equalizes people."

Professor Joanna McGrenere

McGrenere hopes the app will be advanced to support users with visual impairments in the future. The project initially focused on that user population but pivoted to situationally impaired users. People who are visually impaired are a scarce resource as an app-testing group, and their time is better spent once the design lessons have been learned from those who are only situationally impaired.

Some of these lessons came from an initial study the team conducted, which found that 'eyes free' skimming did not work for sighted participants, as they tended to glance at the device's screen while listening. “We are visual creatures,” says Yoon. In addition, participants preferred visual anchors for the narration, such as highlighting of the text currently being narrated, and needed the audio to pause while they scrolled the text.

Using this study and a survey of existing apps, the team identified 11 design guidelines for an eyes-reduced skimming app and built Skimmer, a web-based prototype that reads ePubs. Khan then accompanied six participants on several buses (and often in the rain) to evaluate it. "We started getting a lot of looks and stares from people," Khan says.

Participants were largely able to avoid looking at the screen, supporting Skimmer's eyes-reduced design. Importantly, one participant experienced motion sickness with the comparison app, but not with Skimmer, Khan says. Five of the six said they would continue using the app if possible. While this is encouraging, the team stresses that Skimmer is a prototype meant to demonstrate the efficacy of the design guidelines, not a product. “Skimmer is an example app.”

An important theme in the work was supporting individual differences, such as the potential to allow users to customize discourse markers, navigation patterns, and narration speeds, says McGrenere. "People are different and the way they want to execute their task varies. The system should support these differences."

The work has been an amazing collaboration, says McGrenere, given the different areas of expertise the team brought, including her work in designing for people with impairments and Yoon’s work in multimodal interface design. She and Yoon hope to continue the project with an interested grad student to create a prototype informed by the needs of users with visual impairments. "This project needs to go beyond eyes-reduced and next tackle usage that is entirely eyes-free, in order to achieve its full intentions."
